
GptNano

Joshua Gao · 27 Jun 2024

Inspired by Andrej Karpathy's video "Let's reproduce GPT-2 (124M)", I recreated the 124M-parameter GPT-2 as GPT-Nano and trained it to a higher HellaSwag accuracy than the original model. I then scraped Wikipedia for Civil Engineering-related articles and fine-tuned the model on them, producing a specialized GPT-Nano that generates more domain-relevant text.

Pre-training Dataset: FineWeb-Edu

OpenAI's GPT-2 was trained on WebText, a dataset built by scraping every outbound link from Reddit that received at least 3 karma and extracting the text from the resulting HTML pages.

FineWeb-Edu is a corpus of 1.3T tokens of educational web pages, filtered from the FineWeb dataset with a classifier that scores educational quality. It is generally regarded as higher quality than WebText.

To train GPT-Nano, we use the 10B-token sample of FineWeb-Edu.
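Preparing the 10B-token sample for pre-training mostly means tokenizing every document and packing the resulting token stream into fixed-size files. The sketch below is a minimal, hypothetical version of that step: `shard_tokens`, the injected `encode` callable and the `shard_size` parameter are illustrative names, not the code used for GPT-Nano. It assumes the GPT-2 tokenizer, whose `<|endoftext|>` token (id 50256) is used here to mark document boundaries.

```python
from collections.abc import Callable, Iterable, Iterator

EOT = 50256  # id of <|endoftext|> in the GPT-2 tokenizer


def shard_tokens(
    docs: Iterable[str],
    encode: Callable[[str], list[int]],
    shard_size: int,
) -> Iterator[list[int]]:
    """Yield consecutive shards of exactly `shard_size` tokens (the last may be shorter).

    Each document is prefixed with EOT so the model sees document boundaries;
    a document that does not fit in the current shard spills into the next one.
    """
    buffer: list[int] = []
    for doc in docs:
        buffer.append(EOT)
        buffer.extend(encode(doc))
        while len(buffer) >= shard_size:
            yield buffer[:shard_size]
            buffer = buffer[shard_size:]
    if buffer:
        yield buffer


# Toy run with a character-level stand-in for the real tokenizer.
shards = list(shard_tokens(["ab", "cde"], lambda s: [ord(c) for c in s], shard_size=3))
print(shards)  # [[50256, 97, 98], [50256, 99, 100], [101]]
```

In practice `encode` could be `tiktoken.get_encoding("gpt2").encode_ordinary`, and the documents could be streamed from the `sample-10BT` configuration of `HuggingFaceFW/fineweb-edu` on the Hugging Face Hub; both are assumptions rather than details from this write-up.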

Evaluation Dataset: HellaSwag

To evaluate how "human-like" GPT-Nano's reasoning is, we use the HellaSwag dataset: about 70k multiple-choice commonsense questions, each asking for the most plausible of four endings to a short context. The questions are easy for humans (95.6% accuracy) but difficult for language models (29.55% accuracy for GPT-2).

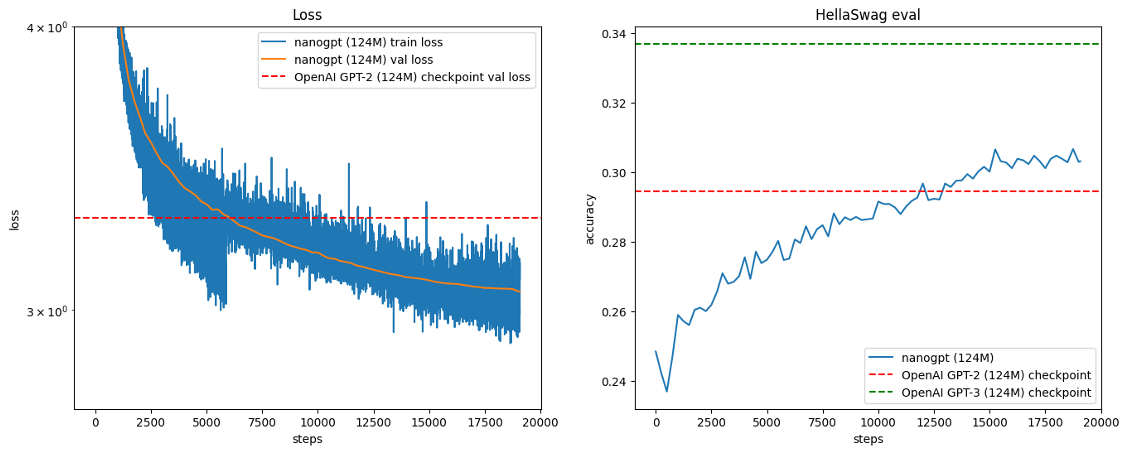
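Each HellaSwag question pairs a context with four candidate endings. A common way to score a small causal language model on them, and the scheme Karpathy's video uses, is to compute the model's average per-token loss on each ending and pick the lowest; we assume that scheme here. In the sketch below the per-token log-probabilities would come from the model, and `pick_ending` and `hellaswag_accuracy` are hypothetical helper names.

```python
from collections.abc import Sequence


def mean_nll(token_logprobs: Sequence[float]) -> float:
    """Average negative log-likelihood per token of one candidate ending."""
    return -sum(token_logprobs) / len(token_logprobs)


def pick_ending(endings: Sequence[Sequence[float]]) -> int:
    """Index of the ending the model finds most likely (lowest mean NLL)."""
    losses = [mean_nll(lp) for lp in endings]
    return min(range(len(losses)), key=losses.__getitem__)


def hellaswag_accuracy(examples: Sequence[tuple[Sequence[Sequence[float]], int]]) -> float:
    """Fraction of examples whose predicted ending matches the gold label.

    Each example is (per-token log-probs for each candidate ending, gold index).
    """
    correct = sum(pick_ending(endings) == label for endings, label in examples)
    return correct / len(examples)


# Toy log-probabilities: ending 0 has 3 tokens, ending 1 has 1 token.
# The summed log-prob would favour ending 1 (-1.0 > -1.8),
# but the per-token average favours ending 0 (0.6 < 1.0).
print(pick_ending([[-0.6, -0.6, -0.6], [-1.0]]))  # 0
```

Averaging rather than summing the loss keeps longer endings from being penalized simply for having more tokens.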

Training Run Results

The left figure shows the loss curve and the right figure shows accuracy on HellaSwag. The model reaches a final accuracy of 30.2%, slightly above GPT-2's 29.55% but still below the 33.8% of the comparably sized GPT-3 model.

This is notable because GPT-Nano uses the same architecture as GPT-2 but was trained on roughly a tenth as many tokens (10B vs. an estimated 100B), which suggests that the higher-quality FineWeb-Edu data accounts for at least part of the gain.

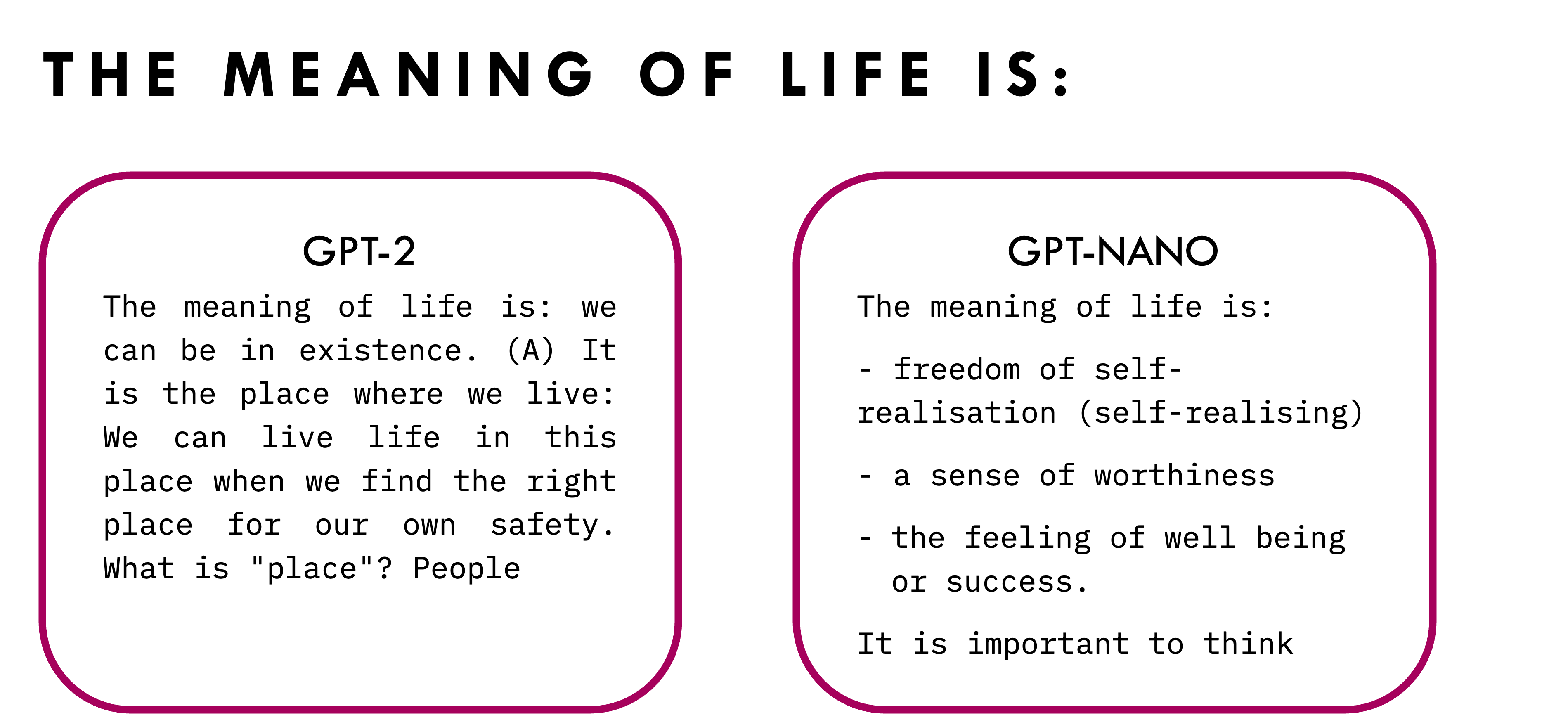
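Assuming GPT-Nano uses the smallest GPT-2 configuration (the model behind the 29.55% HellaSwag baseline, as reproduced in Karpathy's video), its size can be checked by hand. The sketch below counts the parameters of that standard layout: 12 transformer blocks, 12 attention heads, 768-dimensional embeddings, a 1024-token context, the 50,257-token GPT-2 vocabulary, and an output layer that shares weights with the token embedding. `GPTConfig` and `count_params` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GPTConfig:
    block_size: int = 1024   # context length in tokens
    vocab_size: int = 50257  # GPT-2 BPE vocabulary
    n_layer: int = 12        # transformer blocks
    n_head: int = 12         # attention heads (does not change the count)
    n_embd: int = 768        # embedding width


def count_params(cfg: GPTConfig) -> int:
    d = cfg.n_embd
    layer_norm = 2 * d                               # scale + bias
    attn = (d * 3 * d + 3 * d) + (d * d + d)         # fused QKV projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)      # expand to 4d, project back to d
    block = 2 * layer_norm + attn + mlp
    embeddings = cfg.vocab_size * d + cfg.block_size * d  # token + position embeddings
    # The output head is tied to the token embedding, so it adds no parameters.
    return embeddings + cfg.n_layer * block + layer_norm  # + final LayerNorm


print(count_params(GPTConfig()))  # 124439808
```

The result, about 124M parameters, is where the "124M" in the model's usual name comes from; at 10B training tokens it works out to roughly 80 tokens per parameter.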

Qualitative Results

GPT-Nano gives a better response than GPT-2 to the prompt "The meaning of life is:".

Civil Engineering Finetune

To fine-tune the model, we scrape Civil Engineering-related articles from Wikipedia using the Python wikipedia-api package. Starting from the "Civil engineering" category, we follow its subcategories to a depth of 2, collect the articles they contain, and extract their text.
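The category traversal described above can be sketched as a breadth-first walk over Wikipedia's category graph. This is a hypothetical reconstruction, not the scraper used here: `collect_articles` and `wikipedia_members` are illustrative names, depth is assumed to count subcategory levels below the root (so a depth of 2 reaches sub-subcategories), and non-article pages such as files and templates are skipped. The walk takes an injected `get_members` callable so it can run without network access; `wikipedia_members` wires it to the wikipedia-api package's `Wikipedia`, `page()`, `categorymembers` and `Namespace` API.

```python
from collections import deque
from collections.abc import Callable, Iterable, Iterator

# A category member: (page title, True if the member is itself a category).
Member = tuple[str, bool]


def collect_articles(
    root: str,
    get_members: Callable[[str], Iterable[Member]],
    max_depth: int = 2,
) -> set[str]:
    """Return article titles in `root` and its subcategories up to `max_depth` levels down."""
    articles: set[str] = set()
    seen = {root}
    queue = deque([(root, 0)])  # (category title, depth below root)
    while queue:
        category, depth = queue.popleft()
        for title, is_category in get_members(category):
            if not is_category:
                articles.add(title)
            elif depth < max_depth and title not in seen:
                seen.add(title)
                queue.append((title, depth + 1))
    return articles


def wikipedia_members(user_agent: str) -> Callable[[str], Iterator[Member]]:
    """Build a get_members function backed by wikipedia-api (pip install wikipedia-api)."""
    import wikipediaapi  # imported lazily so collect_articles works without the package

    wiki = wikipediaapi.Wikipedia(user_agent=user_agent, language="en")

    def get_members(category: str) -> Iterator[Member]:
        for member in wiki.page(category).categorymembers.values():
            if member.ns == wikipediaapi.Namespace.CATEGORY:
                yield member.title, True
            elif member.ns == wikipediaapi.Namespace.MAIN:
                yield member.title, False
            # Other namespaces (files, templates, ...) are skipped.

    return get_members
```

A call would look like `collect_articles("Category:Civil engineering", wikipedia_members("GptNano-example (you@example.com)"))`, where the user-agent string is a placeholder; each article's text is then available as `wiki.page(title).text`. Walking breadth-first guarantees every category is expanded at its shallowest depth, so a category reachable by several paths is not cut off early.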

When prompted about Abrams' law, neither GPT-2 nor GPT-Nano identified it correctly. However, GPT-Nano's response is much more relevant to Civil Engineering, while GPT-2's response is completely unrelated.